Like many of you, I was instantly captivated by this song, and it inspired me to create my own AI-generated visual version of it.

Using tools such as Deforum (an extension for the AUTOMATIC1111 Stable Diffusion web UI) and Blender, I made numerous versions before finally landing on one that felt right.

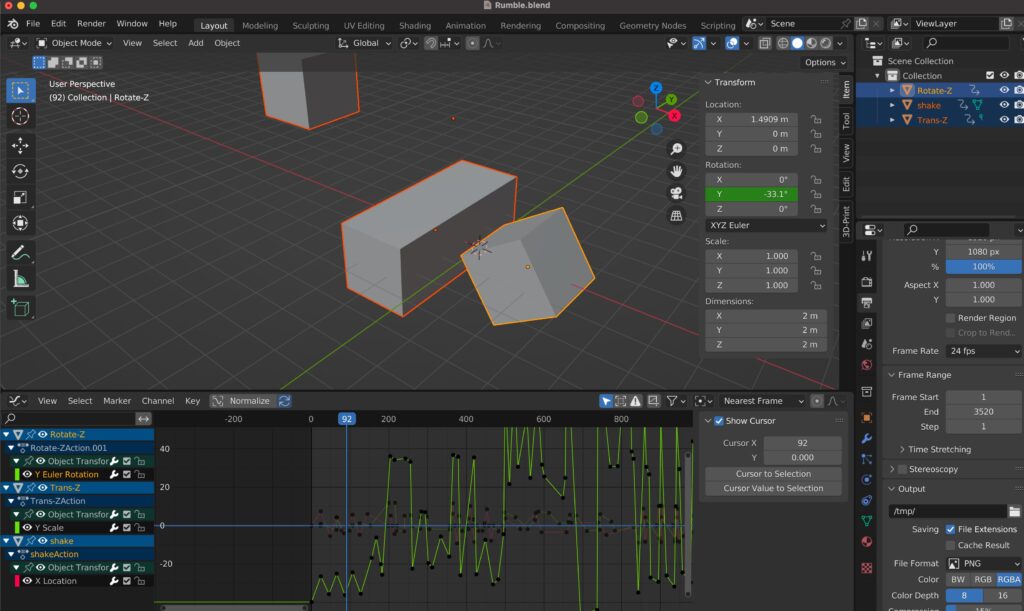

I used Blender to generate keyframes from the audio, which then determined the camera's movement: forward or backward (translation on the Z axis) and left or right (rotation around the Z axis).
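Blender does the audio bake internally, but the idea behind it, turning the soundtrack into one value per video frame, can be sketched in plain Python. This is a simplified stand-in, not Blender's implementation: it assumes mono samples in the range -1 to 1 and uses the RMS loudness of each frame's slice of audio. The helper name `audio_to_keyframes` is hypothetical.

```python
import math
from typing import Sequence


def audio_to_keyframes(
    samples: Sequence[float], sample_rate: int, fps: int
) -> list[tuple[int, float]]:
    """Return one (frame, loudness) pair per video frame.

    Loudness is the RMS of the mono samples inside each frame's time window.
    This is a simplified stand-in for Blender's sound bake, which has its
    own envelope and frequency settings.
    """
    if sample_rate <= 0 or fps <= 0:
        raise ValueError("sample_rate and fps must be positive")
    samples_per_frame = sample_rate / fps
    n_frames = math.ceil(len(samples) / samples_per_frame)
    keyframes = []
    for frame in range(n_frames):
        # Slice of audio that plays while this video frame is on screen.
        start = round(frame * samples_per_frame)
        end = min(round((frame + 1) * samples_per_frame), len(samples))
        window = samples[start:end]
        rms = math.sqrt(sum(s * s for s in window) / len(window)) if window else 0.0
        keyframes.append((frame, rms))
    return keyframes


# 8 samples per second at 2 fps: each frame covers 4 samples.
print(audio_to_keyframes([0, 0, 0, 0, 1, -1, 1, -1], sample_rate=8, fps=2))
# → [(0, 0.0), (1, 1.0)]
```

A silent first half and a loud second half give one quiet keyframe and one loud keyframe. Values like these are the raw material that later gets reshaped into the Z translation and Z rotation curves.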

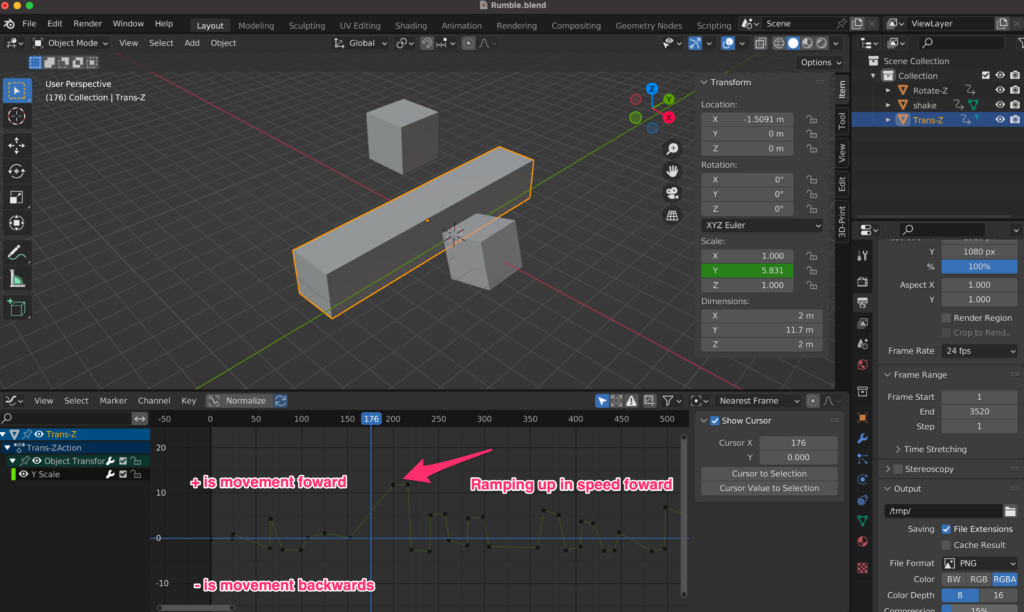

The idea is to bake your audio track into F-Curves on a primitive object, then use Blender's Graph Editor to reshape those curves: first reduce the number of points with decimation, then smooth the result. For forward motion (translation on the Z axis), I find the points where the beat drops and adjust the curve around them in the Graph Editor to control the pacing, creating acceleration or deceleration.
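In Blender, decimating and smoothing are done interactively with the Graph Editor's Decimate and Smooth operators. To show roughly what these two steps do to a baked curve, here is a plain-Python sketch. It is not Blender's algorithm (Blender's Decimate offers ratio-based and error-based modes and works differently); the crude "keep every Nth key" decimation, the moving-average smoothing, and the helper names `decimate` and `smooth` are all assumptions for illustration.

```python
from typing import Sequence

Key = tuple[float, float]  # (frame, value)


def decimate(keys: Sequence[Key], step: int) -> list[Key]:
    """Keep every `step`-th key plus the last one (crude ratio-style decimation)."""
    if step < 1:
        raise ValueError("step must be >= 1")
    kept = list(keys[::step])
    # Always keep the final key so the curve still ends where it did.
    if keys and kept[-1] != keys[-1]:
        kept.append(keys[-1])
    return kept


def smooth(keys: Sequence[Key], radius: int = 1) -> list[Key]:
    """Moving-average smoothing of key values; frame positions are unchanged."""
    out = []
    for i, (frame, _) in enumerate(keys):
        lo = max(0, i - radius)
        hi = min(len(keys), i + radius + 1)
        window = [v for _, v in keys[lo:hi]]
        out.append((frame, sum(window) / len(window)))
    return out


baked = [(0, 0.0), (1, 4.0), (2, 0.0), (3, 4.0), (4, 0.0)]
print(decimate(baked, 3))                      # → [(0, 0.0), (3, 4.0), (4, 0.0)]
print(smooth([(0, 0.0), (1, 3.0), (2, 0.0)]))  # → [(0, 1.5), (1, 1.0), (2, 1.5)]
```

Decimation removes the per-frame jitter of the raw bake, and smoothing rounds off the remaining spikes, which leaves a curve with few enough keys to shape by hand around the beat drops.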

In the Graph Editor, I tweak the baked audio curve section by section to direct the camera's movement through the song. Positive values mean forward movement and negative values mean backward movement; the curve's distance from zero sets the speed in either direction.
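Because each curve value acts as a per-frame speed, the camera's accumulated depth is simply the running sum of those values. The sketch below assumes that reading (that the value is applied as a fixed step every frame, as Deforum does with its Z translation as far as I understand it); `camera_depth` is a hypothetical helper.

```python
from itertools import accumulate
from typing import Sequence


def camera_depth(velocities: Sequence[float], start: float = 0.0) -> list[float]:
    """Integrate per-frame Z velocity into the camera's depth after each frame.

    Positive values move the camera forward, negative values move it back,
    and larger magnitudes cover more distance per frame.
    """
    # `initial` prepends the starting depth; drop it so there is one entry per frame.
    return list(accumulate(velocities, initial=start))[1:]


print(camera_depth([2.0, 2.0, -1.0, 0.0]))  # → [2.0, 4.0, 3.0, 3.0]
```

Here the camera pushes forward for two frames, backs up one unit, then holds still: the sign of each value picks the direction and its size picks the speed.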

I usually split the movements across separate objects so each one is easier to visualize before rendering in Deforum. For instance, I scaled one cube up and down to represent forward and backward movement (translation on the Z axis), animated a second cube to represent rotation to the left or right, and used a third cube to add camera shake at key moments of the song. This process is labor-intensive and takes a lot of trial and error to get the movement right.
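One way to think about the three helper cubes is as three independent curves that are combined into per-frame camera motion. The mapping below is entirely hypothetical: it assumes a rest scale of 1.0 means "no movement", treats the second cube's Z rotation in degrees as the rotation value, and turns the third cube's value into random X/Y jitter for the shake. The `FrameMotion` type, `cubes_to_motion` function, and `gain` parameter are illustrative names, not part of Blender or Deforum.

```python
import random
from dataclasses import dataclass
from typing import Sequence


@dataclass
class FrameMotion:
    translation_z: float  # forward (+) / backward (-)
    rotation_z: float     # left/right rotation, in degrees
    shake_x: float        # jitter driven by the shake cube
    shake_y: float


def cubes_to_motion(
    scale: Sequence[float],     # cube 1: uniform scale, 1.0 = no movement
    rotation: Sequence[float],  # cube 2: Z rotation in degrees
    shake: Sequence[float],     # cube 3: shake intensity, 0.0 = no shake
    gain: float = 10.0,
    seed: int = 0,
) -> list[FrameMotion]:
    """Hypothetical mapping from three helper cubes to per-frame camera motion."""
    if not (len(scale) == len(rotation) == len(shake)):
        raise ValueError("all curves need one value per frame")
    rng = random.Random(seed)  # seeded so repeated test renders get the same shake
    frames = []
    for s, r, k in zip(scale, rotation, shake):
        frames.append(
            FrameMotion(
                translation_z=(s - 1.0) * gain,  # expand -> forward, contract -> backward
                rotation_z=r,
                shake_x=k * rng.uniform(-1.0, 1.0),
                shake_y=k * rng.uniform(-1.0, 1.0),
            )
        )
    return frames


motion = cubes_to_motion([1.5, 0.5, 1.0], [0.0, 5.0, -5.0], [0.0, 0.0, 0.0])
print([m.translation_z for m in motion])  # → [5.0, -5.0, 0.0]
```

Keeping each movement on its own object means you can retime the shake without disturbing the forward motion, which is the main benefit of splitting them up.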

Once the movement is finalized, you can use Blender's Python scripting to export the keyframes and run your test renders in Deforum. I always recommend testing at a lower resolution to fine-tune the camera paths before rendering the final video at a higher resolution.
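Deforum's motion fields (such as `translation_z`) take keyframe schedules written as `frame:(value)` pairs separated by commas. A minimal formatting step might look like the sketch below. It assumes you have already collected `{frame: value}` pairs; inside Blender, one option is to evaluate the object's F-Curve at each frame with `FCurve.evaluate(frame)`. The helper name `to_schedule` and the rounding precision are assumptions.

```python
from typing import Mapping


def to_schedule(values: Mapping[int, float], precision: int = 3) -> str:
    """Format {frame: value} pairs as a Deforum-style schedule string."""
    parts = []
    for frame in sorted(values):
        value = round(values[frame], precision)
        if value == 0:
            value = 0.0  # avoid printing "-0.0" for tiny negative values
        parts.append(f"{frame}:({value})")
    return ", ".join(parts)


print(to_schedule({12: 1.5, 0: 0.0, 24: -0.12349}))
# → 0:(0.0), 12:(1.5), 24:(-0.123)
```

Rounding keeps the pasted string short, which matters when a long song produces thousands of per-frame keys.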

Although the process is demanding, you keep full control over the movement and can adjust it whenever needed in Blender's visual Graph Editor. I have used Parseq for some basic beat synchronization, but I find it harder to work with because using it well relies heavily on math and in-depth knowledge of the tool. I aim to release one video per week made with Parseq to build my skills, but for longer music videos I prefer the Blender technique described above, since it lets me edit my camera paths visually.

Tools used:

Other tools to check out