Another diffusion kid on the block, and one that’s coming out kicking ass, is Stable Diffusion by Stability AI.

We are thrilled to have beta access, and soon the code and model will be released to the public. That is always an amazing move for a company to make, unlike closed-source projects where you have no idea what’s going on under the hood.

Stable Diffusion

Stable Diffusion is a text-to-image model that will empower billions of people to create stunning art within seconds. It is a breakthrough in speed and quality meaning that it can run on consumer GPUs. You can see some of the amazing output that has been created by this model without pre or post-processing on this page.

The model itself builds upon the work of the team at CompVis and Runway in their widely used latent diffusion model combined with insights from the conditional diffusion models by our lead generative AI developer Katherine Crowson, Dall-E 2 by Open AI, Imagen by Google Brain and many others. We are delighted that AI media generation is a cooperative field and hope it can continue this way to bring the gift of creativity to all.

–Stability AI Blog Post

You can watch an interview with Emad Mostaque, the founder of Stability AI.

Current Stable Diffusion Options (Beta)

```
usage: !dream [-h] [--height HEIGHT] [--width WIDTH] [--cfg_scale CFG_SCALE]
              [--number NUMBER] [--separate-images] [--grid]
              [--sampler SAMPLER] [--steps STEPS] [--seed SEED]
              [--prior PRIOR]
              [prompt ...]

positional arguments:
  prompt

optional arguments:
  -h, --help
  --height HEIGHT, -H HEIGHT
                        [512] height of image (multiple of 64)
  --width WIDTH, -W WIDTH
                        [512] width of image (multiple of 64)
  --cfg_scale CFG_SCALE, -C CFG_SCALE
                        [7.0] CFG scale factor
  --number NUMBER, -n NUMBER
                        [1] number of images
  --separate-images, -i
                        Return multiple images as separate files.
  --grid, -g            Composite multiple images into a grid.
  --sampler SAMPLER, -A SAMPLER
                        [k_lms] (ddim, plms, k_euler, k_euler_ancestral,
                        k_heun, k_dpm_2, k_dpm_2_ancestral, k_lms)
  --steps STEPS, -s STEPS
                        [50] number of steps
  --seed SEED, -S SEED  random seed to use
  --prior PRIOR, -p PRIOR
                        vector_adjust_prior
```

Stable Diffusion Tests

I really like playing around with Stable Diffusion, just as I did with Midjourney; however, I wasn’t a fan of DALL-E 2. For some reason I couldn’t get the style of images I liked out of it, and I also felt its terms of service were pretty strict about what you can use the final images for.

My first few tests revolved around generating female elf, cyberpunk, and steampunk matte paintings or digital paintings.

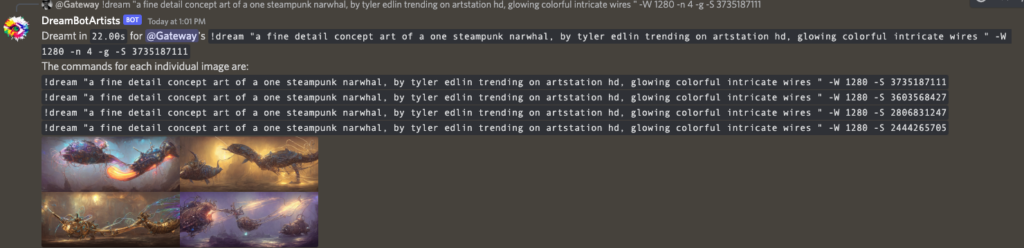

Stable Diffusion currently runs on a Discord server, and every command takes the form !dream “insert some prompt engineering text” followed by any arguments.

Female Elf Warriors

Command line: !dream “a detailed matte painting of beautiful elf warrior princess with green eyes glowing runes craved into the wood of the bow slender female shape smooth face” -n 4 -g -S 988176512

Here -n sets the number of images, -g composites them into a grid, and -S sets the seed value.
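To see how those flags line up with the options in the help text, here is a minimal Python sketch that rebuilds the documented `!dream` options with `argparse` and parses a command. This is an illustration under assumptions, not the bot’s real parser (which isn’t public): the defaults and sampler list are copied from the help output, and `build_parser` and `parse_dream` are hypothetical helper names. Discord-style curly quotes are swapped for straight ones so `shlex` keeps the prompt together as one argument.

```python
import argparse
import shlex

# Hypothetical sketch: rebuilds the beta !dream options from the help text.
# The real Discord bot's parser is not public; defaults come from that help output.
SAMPLERS = ["ddim", "plms", "k_euler", "k_euler_ancestral",
            "k_heun", "k_dpm_2", "k_dpm_2_ancestral", "k_lms"]


def build_parser() -> argparse.ArgumentParser:
    p = argparse.ArgumentParser(prog="!dream")
    p.add_argument("prompt", nargs="*")  # the quoted prompt text
    p.add_argument("--height", "-H", type=int, default=512)
    p.add_argument("--width", "-W", type=int, default=512)
    p.add_argument("--cfg_scale", "-C", type=float, default=7.0)
    p.add_argument("--number", "-n", type=int, default=1)
    p.add_argument("--separate-images", "-i", action="store_true")
    p.add_argument("--grid", "-g", action="store_true")
    p.add_argument("--sampler", "-A", choices=SAMPLERS, default="k_lms")
    p.add_argument("--steps", "-s", type=int, default=50)
    p.add_argument("--seed", "-S", type=int)  # None when no seed is given
    p.add_argument("--prior", "-p")  # purpose not explained in the help text
    return p


def parse_dream(command: str) -> argparse.Namespace:
    # Swap curly quotes for straight ones so shlex treats the prompt as one token.
    command = command.replace("\u201c", '"').replace("\u201d", '"')
    tokens = shlex.split(command)
    if not tokens or tokens[0] != "!dream":
        raise ValueError("commands must start with !dream")
    args = build_parser().parse_args(tokens[1:])
    args.prompt = " ".join(args.prompt)
    return args
```

For example, `parse_dream('!dream “a detailed matte painting of beautiful elf warrior princess” -n 4 -g -S 988176512')` gives `number=4`, `grid=True`, `seed=988176512`, and leaves everything else at the help-text defaults (512 × 512, CFG 7.0, 50 steps, `k_lms`).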

You can see the various results that come back; each one is a 512 × 512 tile. I’m always trying to get the body to look good, including the face, eyes, and arms, and to be somewhat symmetrical. For my first try with SD (Stable Diffusion), I think I got some decent starting results.

When the job completes in Discord, you get the image back along with the prompt and seed for each of the 4 images, so you can reuse a specific one to dig deeper or try building another 4 images.

If you want a specific image from that block, just copy and paste its command from the reply. The images are listed in order: the 1st is top left, then top right, lower left, and lower right.
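That ordering is plain row-major order (left to right, then top to bottom). The small Python sketch below turns an image number into its grid position and a pixel crop box. It assumes a 2-column grid of equal-sized tiles with no padding or borders between them, which is my assumption about the grid layout rather than something the bot documents; `grid_position` and `crop_box` are hypothetical helpers.

```python
# Hypothetical helpers: map the 1-based image number from a !dream reply to its
# place in the returned grid. Assumes 2 columns of equal tiles with no padding.
COLUMNS = 2


def grid_position(index: int, columns: int = COLUMNS) -> tuple[int, int]:
    """Return (row, col), both 0-based, for a 1-based image number in row-major order."""
    if index < 1:
        raise ValueError("image numbers start at 1")
    return (index - 1) // columns, (index - 1) % columns


def crop_box(index: int, width: int = 512, height: int = 512,
             columns: int = COLUMNS) -> tuple[int, int, int, int]:
    """Return (left, upper, right, lower) pixel bounds of that tile within the grid."""
    row, col = grid_position(index, columns)
    return col * width, row * height, (col + 1) * width, (row + 1) * height


for i, name in enumerate(["top left", "top right", "lower left", "lower right"], start=1):
    print(i, name, grid_position(i), crop_box(i))
```

The lower-left elf picked below is image 3, row 1, column 0. The `(left, upper, right, lower)` tuple uses the same order as Pillow’s `Image.crop`, so under the no-padding assumption it could be used to cut a single tile out of a saved grid; for taller images such as `-H 896`, pass `height=896`.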

From the block of 4 female elves, I chose the one in the lower left to get a better image and then worked on adjusting the prompt.

You can paste the following command into Discord once you get access to SD.

!dream “a detailed matte painting of beautiful elf warrior princess with green eyes glowing runes craved into the wood of the bow slender female shape smooth face symmetry” -S 1681130093

I got this from the file name, so hopefully the full prompt wasn’t cut off. Just be aware of that, and always keep a running spreadsheet or document of your tries (which I’m guilty of not doing with SD yet).
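As a low-effort version of that running spreadsheet, here is a Python sketch that appends each try to a CSV file using only the standard library, so the full prompt is stored instead of a possibly truncated file name. The file name `sd_tries.csv`, the column set, and the `log_try` and `read_tries` helpers are all my own assumptions; add whatever columns you care about.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical log file and columns; adjust to taste.
LOG_PATH = Path("sd_tries.csv")
FIELDS = ["timestamp", "prompt", "seed", "height", "width", "number", "notes"]


def log_try(prompt: str, seed: int, height: int = 512, width: int = 512,
            number: int = 1, notes: str = "", path: Path = LOG_PATH) -> None:
    """Append one generation attempt, writing a header row if the file is new or empty."""
    is_new = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "timestamp": datetime.now(timezone.utc).isoformat(timespec="seconds"),
            "prompt": prompt,  # the full prompt text, commas and all
            "seed": seed,
            "height": height,
            "width": width,
            "number": number,
            "notes": notes,
        })


def read_tries(path: Path = LOG_PATH) -> list[dict]:
    """Read every logged attempt back as a list of dicts (all values are strings)."""
    with path.open(newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))
```

For example, after a run you might call `log_try(prompt, seed=1681130093, notes="lower-left elf")` with the exact prompt you typed, and open `sd_tries.csv` in any spreadsheet app later.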

So what does a crazy, random steampunk prompt look like? Well, I tried one of the artists I use for this style, and lo and behold, I was blown away by the results.

Command: !dream “kazuhiko nakamura arte deviantart ilustracoes modelos 3d surreal steampunk cyberpunk sombrio bizarro detailed steampunk wires” -n 4 -g -S 479378722

As you can see, you can get some really trippy results. I have been exploring more full-body images by changing the height value to 704; note, however, that you will sometimes get a double head (seen below), because whatever they are doing under the hood, it is coming from a 512×512 model.

Command: !dream “a beautiful matte painting of a female with a slender body and half a steampunk face nice smile blue eye ornate colorful wires trending on arts…” -H 896 -n 4 -g -S 1846394090
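The help text says height and width must be multiples of 64, with 512 as the default, so a quick check before typing -H can save a wasted run. This Python sketch validates a size and reports how far each side stretches past 512. The helper names `check_size` and `nearest_valid` are hypothetical, and the ratio is only a rough gauge of how far you are pushing the model away from its 512×512 starting point; it does not predict whether you will get a double head.

```python
# Hypothetical helpers: validate a !dream size against the help text's rule
# (multiple of 64) and compare it with the 512 default.
BASE = 512
STEP = 64


def nearest_valid(size: int, step: int = STEP) -> int:
    """Round a requested size to the nearest multiple of 64, never below 64.

    Exact ties follow Python's round(), which picks the even multiple.
    """
    return max(step, round(size / step) * step)


def check_size(height: int, width: int = BASE) -> dict:
    """Report whether each side is a valid multiple and how much it stretches past 512."""
    return {
        "height_ok": height % STEP == 0,
        "width_ok": width % STEP == 0,
        "height_ratio": height / BASE,
        "width_ratio": width / BASE,
    }


for h in (704, 896, 700):
    print(h, check_size(h), "nearest valid:", nearest_valid(h))
```

Both heights used above pass the check: 704 is 11 × 64 (1.375 times the default) and 896 is 14 × 64 (1.75 times), while something like 700 would be rounded to 704.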

Other Stable Diffusion Images

Here are a few other images that I have been working on.

So what do you guys think of Stable Diffusion? If you create something cool, tag me on Twitter @DreamingComputr or on Instagram.

I can’t wait to see what everyone comes up with!

Also check out this great Google document for detailed explanations of each setting.